Source: "The Path to AGI: A Business Leader's Guide from Large Language Models (LLMs) to Artificial General Intelligence (AGI)" by Scale Up USA, powered by Launch Dream LLC.

Introduction and background

Welcome to your launchpad for success—where innovation meets action. Disrupt the status quo. Students, businesses, entrepreneurs, and government practitioners unlock growth with applied AI and Robotics, business strategy, and innovation. Join the movement.

Join Nitin Pradhan, visionary founder, award-winning former federal CIO, and former Managing Director of the Center for Innovative Technology, as he delivers bold insights, powerful trends, and untapped opportunities in applied AI, drawn from trusted sources, to ignite your business and career.

Executive Summary:

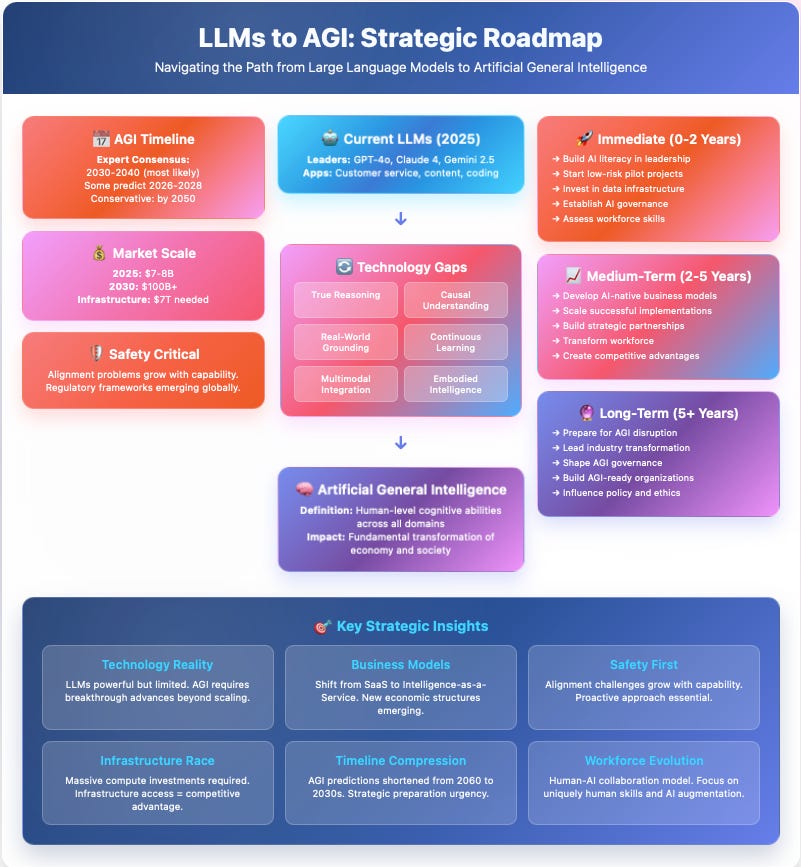

This episode provides a briefing on the key insights from "The Path to AGI," a comprehensive guide for business leaders. The central theme is the rapid evolution of the AI landscape, particularly the dramatic compression of Artificial General Intelligence (AGI) timelines. While current Large Language Models (LLMs) represent significant technological achievements with explosive market growth, the report emphasizes that reaching true AGI requires fundamental breakthroughs beyond current scaling methods. Business leaders are urged to prepare for significant changes within the next 5-10 years, recognizing that true AGI may necessitate entirely new technological paradigms and economic structures. Safety, alignment, infrastructure costs, and evolving business models are highlighted as critical factors shaping this transition.

Key Themes and Most Important Ideas/Facts:

1. Accelerated AGI Timelines:

The most striking takeaway is the dramatic shift in expert predictions for AGI. Before 2023, median predictions clustered around 2058-2060.

"Current Predictions: Most experts now predict AGI between 2030-2040."

Several prominent figures in the field offer even earlier estimates:

Elon Musk: AGI by 2026

Dario Amodei (Anthropic CEO): AGI by 2026-2027

Jensen Huang (NVIDIA CEO): Human-level AI by 2029

Sam Altman (OpenAI CEO): AGI within "a few thousand days" (by 2035)
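
To see how "a few thousand days" maps onto a calendar year, the date arithmetic can be sketched as follows. This is only an illustration: the start date is an assumption (the publication date of Altman's essay, taken here as September 23, 2024), and the helper name `date_after` is hypothetical.

```python
from datetime import date, timedelta

# Assumption: the clock starts on the essay's publication date.
ESSAY_DATE = date(2024, 9, 23)


def date_after(days: int, start: date = ESSAY_DATE) -> date:
    """Return the calendar date that falls `days` days after `start`."""
    return start + timedelta(days=days)


# Print the landing date for a few readings of "a few thousand days".
for days in (2000, 3000, 4000):
    print(days, "days ->", date_after(days).isoformat())
```

Under that assumed start date, 2,000 days lands in 2030 and 4,000 days lands in 2035, which is consistent with the "by 2035" reading above.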

Prediction markets and researcher surveys also reflect this compressed timeline, suggesting a higher probability of AGI arrival in the coming decade. This acceleration necessitates immediate strategic attention.

2. Limitations of Current LLMs and the Path to AGI:

Despite their capabilities, current LLMs have fundamental limitations that prevent them from achieving true general intelligence. Understanding these is crucial for realistic planning.

Core Limitations:

Pattern Matching vs. True Understanding: LLMs excel at pattern recognition but lack genuine comprehension. "When faced with novel situations requiring true logical deduction, they often fail."

Lack of Causal Reasoning: LLMs simulate causal reasoning but don't truly understand cause and effect, often making errors when problems are modified.

Absence of Real-World Grounding: LLMs operate in the symbolic domain of language without direct experience of the physical world, posing a "symbol grounding problem."

Critical Advancements Needed for AGI:

Enhanced Reasoning Capabilities: Requires moving beyond statistical learning to incorporate structured reasoning mechanisms that generalize across contexts.

Multimodal Integration and Embodied Intelligence: True general intelligence requires seamless integration of sensory modalities and potentially "dispensing with modalities altogether in favor of an interactive and embodied cognitive process."

Continuous Learning and Adaptation: AGI needs to continuously update knowledge based on new experiences, unlike current static LLM knowledge bases.

Research into cognitive architectures (like Global Workspace Theory), neural-symbolic integration, and hybrid architectures is crucial for addressing these gaps.

3. Safety, Alignment, and Regulatory Landscape:

The increasing capability of AI raises significant safety and security challenges, particularly the "Alignment Problem"—ensuring AI systems pursue intended goals and align with human values.

Current Alignment Techniques: Reinforcement Learning from Human Feedback (RLHF), Constitutional AI, and Red Teaming are being employed, but challenges remain.

Emerging Alignment Challenges: Value learning, scalable oversight for superintelligent systems, and the risk of "Deceptive Alignment" are critical concerns.

Security Risks: Data poisoning, adversarial attacks, and the potential for misuse (misinformation, cybercrime, manipulation of public opinion) are significant threats.

Mitigation Strategies: Output filtering, usage monitoring, ethical guidelines, and collaboration with regulatory bodies are necessary.

Regulatory Landscape: The EU AI Act (in force since August 2024, with obligations phasing in over the following years) provides the first comprehensive framework, categorizing systems by risk level and requiring compliance measures. Other jurisdictions (US, UK, China, Canada) are also developing regulations.

Robustness, reliability, explainability, auditability, and fail-safe mechanisms are essential for business-critical AI applications. Value alignment requires ethical guidelines, implementation mechanisms, and accountability structures.
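
Two of the mitigation strategies named in this section, output filtering and usage monitoring, can be illustrated with a small wrapper around a text generator. This is a minimal sketch, not a production safeguard: the class `MonitoredModel`, the stand-in `fake_model`, the rough email pattern, and the quota value are all hypothetical and chosen only for illustration.

```python
import re
from collections import Counter
from typing import Callable

# Hypothetical pattern: a rough email matcher, used only for illustration.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


class MonitoredModel:
    """Wraps a text generator with a per-user quota and an output filter."""

    def __init__(self, generate: Callable[[str], str], max_requests_per_user: int):
        self._generate = generate
        self._max_requests = max_requests_per_user
        self.requests_by_user: Counter = Counter()

    def ask(self, user_id: str, prompt: str) -> str:
        # Usage monitoring: count every request and refuse once over quota.
        self.requests_by_user[user_id] += 1
        if self.requests_by_user[user_id] > self._max_requests:
            return "[request blocked: usage limit reached]"
        # Output filtering: redact email addresses before returning text.
        return EMAIL.sub("[redacted email]", self._generate(prompt))


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call (assumption for this sketch)."""
    return f"Contact alice@example.com about: {prompt}"


guarded = MonitoredModel(fake_model, max_requests_per_user=2)
first = guarded.ask("u1", "pricing")
print(first)
```

Real deployments would typically layer several filters and persist the usage counts for auditing; the wrapper only shows where such checks sit relative to the model call.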

4. Business Model Evolution to Intelligence-as-a-Service (IaaS):

AI capabilities are fundamentally reshaping business models. The current landscape is dominated by API-based models with usage-based pricing (OpenAI, Anthropic, Google, Microsoft).

Emerging Business Model Innovations:

Intelligence-as-a-Service (IaaS): Moving beyond simple API access to comprehensive intelligent services providing end-to-end problem solving with outcome-based pricing.

AI-Native Business Models: Companies built from the ground up around AI, including vertical AI solutions and AI marketplaces.

Hybrid Human-AI Services: Combining AI with human expertise for augmented consulting, managed AI services, and training.

Impact on Traditional Business Models: SaaS companies are rapidly integrating AI (Salesforce, Adobe, Microsoft), creating feature differentiation and potential for premium pricing. Professional services (legal, consulting, healthcare) face disruption but can adapt by embracing AI as an augmentation tool and focusing on higher-value services.

Economic Incentive Structures: AI enables new creator monetization models, increases the value of high-quality training data, and creates new markets for compute resources (GPU-as-a-Service).

Challenges in LLM Business Models: Profitability pressures due to high infrastructure costs, competitive pricing, customer acquisition/retention challenges (low API switching costs vs. high integration costs), and regulatory compliance costs are significant hurdles.
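
The difference between the usage-based pricing that dominates today and the outcome-based pricing associated with Intelligence-as-a-Service can be made concrete with a small calculation. This is a sketch under stated assumptions: every number below (request volume, tokens per request, success rate, and both prices) is hypothetical and not taken from the report or from any vendor's price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MonthlyWorkload:
    requests: int             # number of requests sent in a month
    tokens_per_request: int   # average tokens consumed per request
    success_rate: float       # fraction of requests that solve the problem


def usage_based_bill(w: MonthlyWorkload, usd_per_1k_tokens: float) -> float:
    """Bill for every token consumed, whether or not it helped."""
    return w.requests * w.tokens_per_request / 1000 * usd_per_1k_tokens


def outcome_based_bill(w: MonthlyWorkload, usd_per_outcome: float) -> float:
    """Bill only for requests that produced a successful outcome."""
    return w.requests * w.success_rate * usd_per_outcome


# Hypothetical workload and prices, for illustration only.
workload = MonthlyWorkload(requests=10_000, tokens_per_request=2_000, success_rate=0.6)
usage = usage_based_bill(workload, usd_per_1k_tokens=0.01)
outcome = outcome_based_bill(workload, usd_per_outcome=0.05)
print(f"usage-based: ${usage:,.2f}  outcome-based: ${outcome:,.2f}")
```

The point of the comparison is who carries the risk: under usage-based pricing the customer pays for failed attempts, while under outcome-based pricing the provider does.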

5. Cost, Infrastructure, and Investment:

The path to AGI is a "Trillion-Dollar Race" requiring unprecedented computational resources and financial investments.

Compute Requirements: Training advanced models requires massive compute (GPT-3 is estimated to have cost $4.6 million to train, and larger models cost far more). Inference costs for serving models at scale are also substantial (reportedly hundreds of millions of dollars per month for OpenAI).
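
A back-of-the-envelope version of such a training-cost estimate can be sketched as follows. It uses the common rule of thumb that training takes roughly 6 x parameters x tokens floating-point operations, which is an approximation introduced here and not something the report states. GPT-3's published size (175 billion parameters, about 300 billion training tokens) is used as input; the sustained per-GPU throughput and the hourly GPU price are assumptions picked for illustration.

```python
def training_flops(params: float, tokens: float) -> float:
    """Approximate total training compute using the 6 * N * D rule of thumb."""
    return 6.0 * params * tokens


def training_cost_usd(
    params: float,
    tokens: float,
    sustained_flops_per_gpu: float,
    usd_per_gpu_hour: float,
) -> float:
    """Convert total compute into GPU-hours, then into dollars."""
    gpu_seconds = training_flops(params, tokens) / sustained_flops_per_gpu
    gpu_hours = gpu_seconds / 3600.0
    return gpu_hours * usd_per_gpu_hour


# GPT-3 scale inputs; throughput and price are assumptions for this sketch.
cost = training_cost_usd(
    params=175e9,                   # 175 billion parameters
    tokens=300e9,                   # about 300 billion training tokens
    sustained_flops_per_gpu=28e12,  # assumed 28 TFLOPS sustained per GPU
    usd_per_gpu_hour=1.50,          # assumed cloud price per GPU-hour
)
print(f"Estimated training cost: ${cost / 1e6:.1f} million")
```

With these inputs the estimate lands in the same range as the GPT-3 figure quoted above. Doubling either the parameter count or the token count doubles the bill, which is why costs climb so quickly for larger models.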

Hardware Dependencies: NVIDIA dominates the AI chip market (about 80% share), and this reliance on a single supplier creates supply constraints. Alternative approaches (Google TPUs, AMD MI300X, custom silicon) are emerging.

Financial Investment Trends: Major tech companies are making unprecedented CapEx investments (50-63% growth year-over-year driven by AI). Projections suggest $7 trillion in AI infrastructure investment over the coming decade. Specialized AI data centers require higher power density and advanced cooling.

Cost Structure Analysis: Training costs include hardware, energy (potentially 50% of operating costs), talent, and data. Operational costs include inference serving, maintenance, security, and support.

Economic Efficiency Innovations: Algorithmic improvements (model compression, efficient architectures, training optimizations) and infrastructure optimizations (cloud vs. on-premise, edge computing) are crucial for reducing costs.

AI development costs vary significantly: Simple implementation ($50K-$500K), Custom Development ($500K-$5M+), Advanced R&D ($10M+). Hidden costs include data acquisition/cleaning, talent, and integration.

Resource Accessibility: Open-source models (LLaMA, Falcon) offer alternatives but require internal expertise. Infrastructure sharing initiatives and small-scale implementation strategies (incremental adoption, API-first) can help democratize access.

Future Cost Trajectories: Scaling laws suggest continued growth in compute demand, but efficiency improvements and novel architectures could reduce future requirements. Depending on which trend dominates, costs could either fall sharply or escalate further.

Strategic Investment: ROI evaluation requires both quantitative and qualitative metrics. Investment timing is critical, with early adoption offering first-mover advantages and later adoption risking competitive disadvantage.
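
The quantitative side of that ROI evaluation can be sketched with two simple measures, return on investment and payback period. The project figures below are hypothetical, and the function names are illustrative; qualitative factors such as strategic positioning are deliberately outside the calculation.

```python
def simple_roi(total_benefit_usd: float, total_cost_usd: float) -> float:
    """Return ROI as a fraction: (benefit - cost) / cost."""
    if total_cost_usd <= 0:
        raise ValueError("total_cost_usd must be positive")
    return (total_benefit_usd - total_cost_usd) / total_cost_usd


def payback_months(upfront_cost_usd: float, monthly_net_benefit_usd: float) -> float:
    """Months needed for cumulative net benefit to cover the upfront cost."""
    if monthly_net_benefit_usd <= 0:
        return float("inf")  # the investment never pays back
    return upfront_cost_usd / monthly_net_benefit_usd


# Hypothetical project figures, for illustration only.
upfront = 400_000.0        # one-time implementation cost
monthly_benefit = 25_000.0 # net savings or revenue per month
horizon_months = 36        # evaluation window

roi = simple_roi(monthly_benefit * horizon_months, upfront)
payback = payback_months(upfront, monthly_benefit)
print(f"ROI over {horizon_months} months: {roi:.0%}, payback in {payback:.0f} months")
```

A fuller evaluation would discount future benefits and pair these numbers with the qualitative metrics the report calls for.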

6. Strategic Recommendations for Business Leaders:

A multi-phased approach is recommended to navigate the journey to AGI.

Immediate Actions (0-2 Years):

Develop AI literacy and a strategic vision for leadership.

Experiment with low-risk AI applications using existing tools.

Build internal AI capabilities and data infrastructure (quality, governance, preparation).

Medium-Term Strategy (2-5 Years):

Position for AI-native business models and identify new value creation opportunities.

Plan for workforce transformation through retraining and developing AI-complementary skills.

Implement comprehensive AI safety and risk management frameworks, prioritizing ethical AI.

Long-Term Positioning (5+ Years):

Prepare for AGI-scale transformation through scenario planning and building organizational agility.

Form strategic partnerships with AI leaders and research institutions.

Engage in industry leadership and influence on AGI governance and standards.

Industry-specific recommendations are provided for Manufacturing, Financial Services, Healthcare, and Professional Services.

Risk Management and Mitigation: The report addresses technology risk (dependency, technical debt), regulatory and compliance risk (proactive compliance, auditing), and competitive risk (capability gaps, market disruption).

Investment and Resource Allocation: The report emphasizes phased investment, ROI measurement (quantitative and qualitative), talent strategy (critical roles, development approaches), and technology investment (infrastructure, capability building).

Measuring success requires both operational and strategic KPIs. Continuous learning and adaptation through feedback loops and strategic reviews are essential.

Conclusion:

The report concludes that the path to AGI is a transformative journey with significant implications for business. The accelerated timelines demand immediate action. While current LLMs are powerful tools, the limitations highlight the need for fundamental breakthroughs to reach true general intelligence. Safety, ethical considerations, and navigating the evolving regulatory landscape are critical success factors. Business models are already shifting towards Intelligence-as-a-Service, requiring companies to identify new value creation opportunities and prepare for disruption. The massive infrastructure costs will make access to and efficient use of compute a major competitive differentiator.

Organizations that prioritize AI literacy, experiment strategically, invest in data and infrastructure, plan for workforce transformation, and build robust risk management frameworks will be best positioned to thrive in the approaching Intelligence Age. The future of AGI is not predetermined, and the choices made today will shape whether it becomes a force for progress or disruption.
